Axera Neutron NPU Adapts to Llama 3 and Phi-3 Models, Driving Widespread Adoption of Large AI Models

Background

The relentless progress of large AI model technology is fueling intelligent transformation across diverse industries. Meta and Microsoft recently released the Llama 3 and Phi-3 model series in quick succession. The Llama 3 series includes 8B and 70B variants, while the Phi-3 series comes in mini (3.8B), small (7B), and medium (14B) sizes. To give developers early access, Axera’s NPU toolchain team quickly adapted the AX650N platform to the Llama 3 8B and Phi-3-mini models.

Llama 3

On April 19, 2024, Meta introduced its Meta Llama 3 series of large language models (LLMs), comprising an 8-billion-parameter (8B) model and a 70-billion-parameter (70B) model. In Meta’s benchmark tests, the Llama 3 models performed strongly, rivaling popular closed-source models in usefulness and safety evaluations.

Official website: https://llama.meta.com/llama3

Architecturally, Llama 3 uses a standard decoder-only Transformer with a tokenizer whose vocabulary has 128K tokens. It was pretrained on over 15 trillion tokens of publicly available data, of which over 5 percent is non-English data covering more than 30 languages. This training dataset is seven times larger than that of its predecessor, Llama 2.

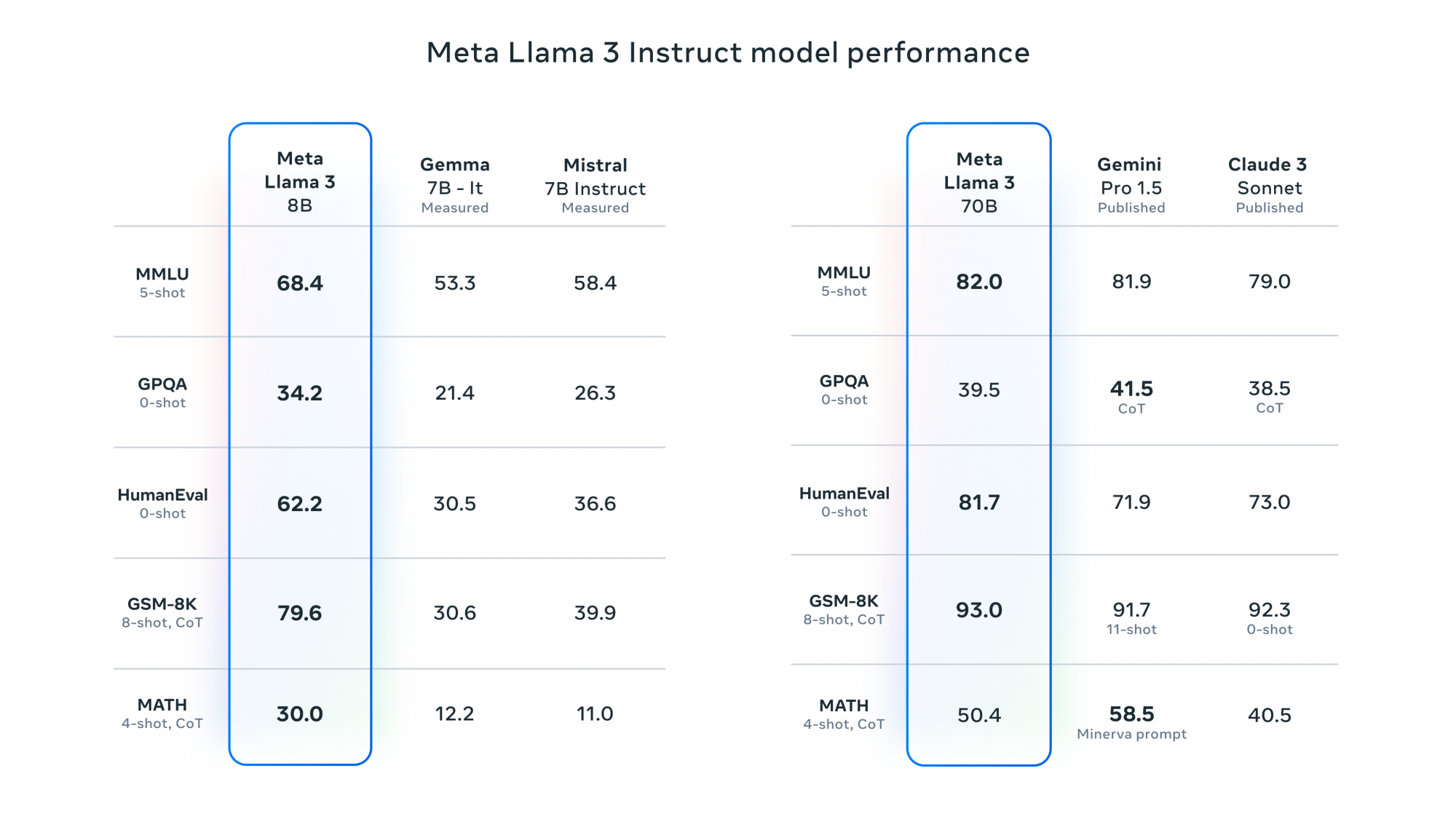

According to Meta’s test results, Llama 3 8B outperformed Gemma 7B and Mistral 7B Instruct on several benchmarks, including MMLU, GPQA, and HumanEval. Llama 3 70B surpassed Claude 3 Sonnet, the mid-tier model in Anthropic’s closed-source Claude 3 family, and beat Google’s Gemini 1.5 Pro on three of the five benchmarks compared.

On-Board Results

The AX650N has now been adapted to run the Int8-quantized version of Llama 3 8B. With Int4 quantization, token throughput per second could roughly double, making the model fast enough for normal human-computer interaction.
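Why moving from Int8 to Int4 could roughly double throughput can be seen with simple arithmetic. The Python below is a rough model under stated assumptions, not Axera’s method or measured data: it assumes token-by-token decoding is limited by memory bandwidth, so every generated token streams all weights from memory once, and it ignores the KV cache, activations, and quantization scale overhead. The helper functions and the `ASSUMED_BANDWIDTH` value are hypothetical and chosen only for illustration; the real AX650N bandwidth is not given in this article.

```python
# Back-of-the-envelope sketch, NOT Axera's measured data.
# Assumption: decoding is memory-bandwidth bound, so each new token reads
# every weight once and tokens/s scales inversely with total weight bytes.

PARAMS_LLAMA3_8B = 8.0e9  # nominal "8B" parameter count (approximate)


def weight_bytes(num_params: float, bits_per_weight: int) -> float:
    """Bytes needed to store the weights (ignores scales and zero-points)."""
    return num_params * bits_per_weight / 8


def decode_tokens_per_s(num_params: float, bits_per_weight: int,
                        bandwidth_bytes_per_s: float) -> float:
    """Upper bound on decode speed if every token streams all weights once."""
    return bandwidth_bytes_per_s / weight_bytes(num_params, bits_per_weight)


# Hypothetical effective memory bandwidth, illustrative only.
ASSUMED_BANDWIDTH = 30e9  # bytes per second

int8 = decode_tokens_per_s(PARAMS_LLAMA3_8B, 8, ASSUMED_BANDWIDTH)
int4 = decode_tokens_per_s(PARAMS_LLAMA3_8B, 4, ASSUMED_BANDWIDTH)

print(f"Int8 weights: {weight_bytes(PARAMS_LLAMA3_8B, 8) / 1e9:.1f} GB, ~{int8:.2f} tokens/s")
print(f"Int4 weights: {weight_bytes(PARAMS_LLAMA3_8B, 4) / 1e9:.1f} GB, ~{int4:.2f} tokens/s")
print(f"Speed-up from Int8 to Int4: {int4 / int8:.1f}x")
```

Under this simple model the speed-up depends only on the bits per weight, so halving 8 bits to 4 gives about 2x whatever bandwidth is assumed; real gains are likely to be somewhat lower because of the overheads the sketch leaves out.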

Phi-3

Soon after Llama 3 was released, competitors responded with lightweight models that can run on mobile devices.

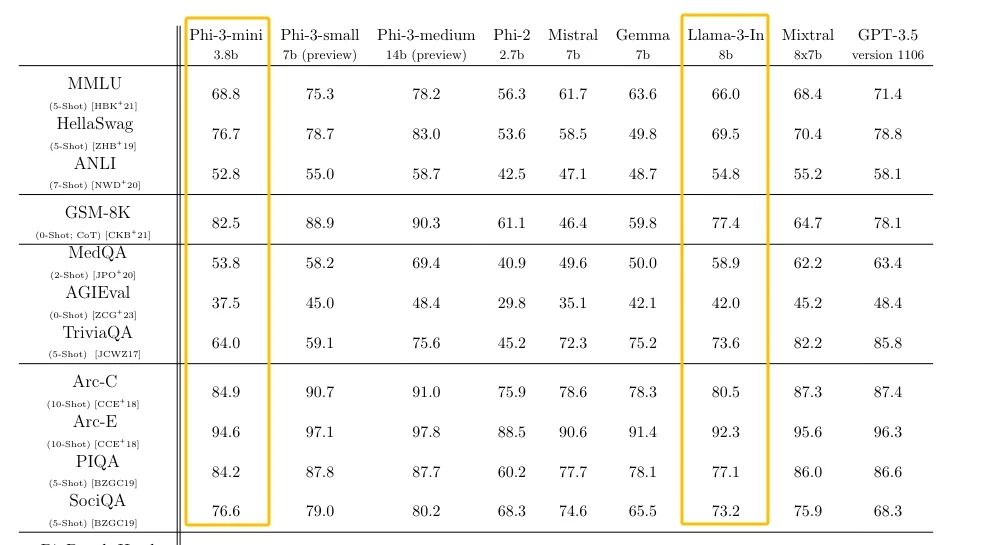

On April 23, 2024, Microsoft released Phi-3, its in-house family of small language models. Although Phi-3-mini is compact enough to be deployed on mobile phones, Microsoft reports that its performance rivals that of models such as Mixtral 8x7B and GPT-3.5. According to Microsoft, the key innovation lies in a higher-quality training dataset.

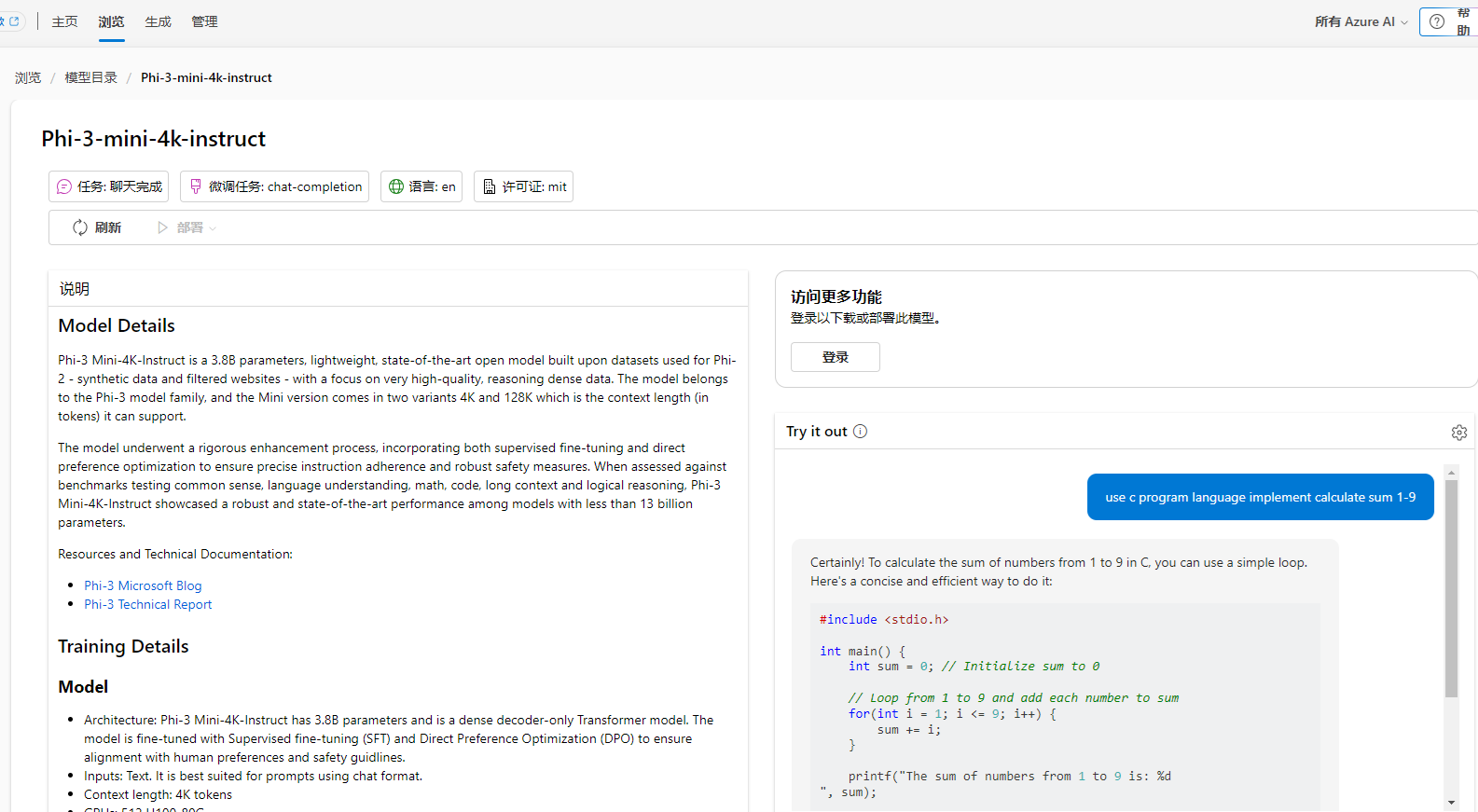

Online demo:

https://ai.azure.com/explore/models/Phi-3-mini-4k-instruct/version/2/registry/azureml

On-Board Results

The AX650N has now completed adaptation of the Int8-quantized version of Phi-3-mini, which runs fast enough for normal human-computer interaction.

Other Outcomes

The AX650N could be adapted to Llama 3 and Phi-3 so quickly because, earlier this year, the team had already added LLM support and optimizations to the existing NPU toolchain. Besides Llama 3 and Phi-3, adaptations have also been completed for other mainstream open-source LLMs, including Llama 2, TinyLlama, Phi-2, Qwen1.5, and ChatGLM3.

These results have been released to the developer community at https://github.com/AXERA-TECH/ax-llm, and enthusiasts are invited to explore and experiment with them.

Follow-up Plan

2024 is regarded by many as the first year of the AI PC (AIPC), and we will offer more solutions for common AIPC applications. Leveraging the high energy efficiency of the Axera Neutron NPU, we enable cost-effective local deployment of a wide range of exciting LLMs, making large models affordable for everyone. This embodies our mission: “AI for All, AI for a Better World.”